‘Ex Machina’ and the theory of consciousness

The sci-fi thriller Ex Machina (2015), directed by Alex Garland, has brought the problem of consciousness into the limelight: whether a machine can spontaneously develop a mind, mental states, and consciousness. Everyone recalls that IBM’s Deep Blue beat the world chess champion in 1997 and that IBM’s Watson won the quiz show Jeopardy in 2011. However, some neuroscientists do not think that an artificial neural network (ANN) with as many neurons as a human brain would be enough for consciousness. In fact, the cerebellum has more neurons than the cerebral cortex and appears to be just as complicated, yet it hardly affects consciousness, even after its complete removal.

Consciousness in the brain of a healthy adult can be assessed in laboratory experiments by combining behavioral reports with magnetoencephalography (MEG), functional brain imaging in magnetic resonance scanners (fMRI), or high-density electroencephalography (EEG), which records electrical activity with electrodes placed outside the skull. These techniques aim to uncover the relation between the behavioral (BCC) and neural correlates of consciousness (NCC), that is, the minimal behavioral and neural mechanisms that are jointly sufficient for any one conscious percept. Popular NCC candidates in the two cerebral hemispheres include the strong activation of higher-order frontoparietal cortices, high-frequency electrical activity in the gamma range (35-80 Hz), and the occurrence of an EEG event known as the P300 wave. There is still no consensus on whether any of these signs can be treated as reliable “signatures” of consciousness, but they are currently used to complement behavioral assessment and diagnosis in patients.

In order to explain why some neurons in the brain are involved in consciousness and others are not, a theory of consciousness is needed. In 2004, Giulio Tononi, from the University of Wisconsin-Madison, USA, proposed such a theory, the Integrated Information Theory (IIT) or Φ theory, which states that consciousness is the amount of integrated information generated by a network of connected elements that causally relates sources of information, the so-called complex [1]. IIT version 3.0, published in 2014, is based on five axioms [2]: existence, composition, information, integration, and exclusion. In Tononi’s opinion, these axioms are self-evident. Every physical mechanism that acts as a complex must satisfy five postulates, one associated with each axiom.

Let me briefly present the five axioms of IIT. For more details, I strongly recommend the recent expository paper on the current state of IIT by Tononi and Christof Koch, from the Allen Institute for Brain Science, Seattle, WA, USA [3]. First, the axiom of existence claims that consciousness exists: each of us experiences it intrinsically, independently of any external observer. The associated postulate of existence states that consciousness has a cause: it is generated by a system of mechanisms in a given physical state. Hence, in principle, even a machine can be conscious.

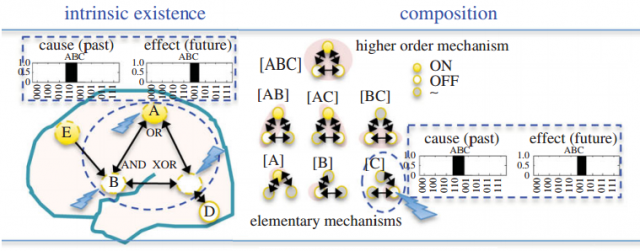

The second axiom, composition, says that consciousness is structured: each experience is composed of many phenomenological distinctions. The postulate of composition states that a conscious system can be divided into a network of interconnected elements. These elementary mechanisms can combine to form distinct higher-order mechanisms, i.e., the network can be compartmentalized. A group of mechanisms is considered distinct only if it cannot be reduced to its constituent elementary mechanisms.

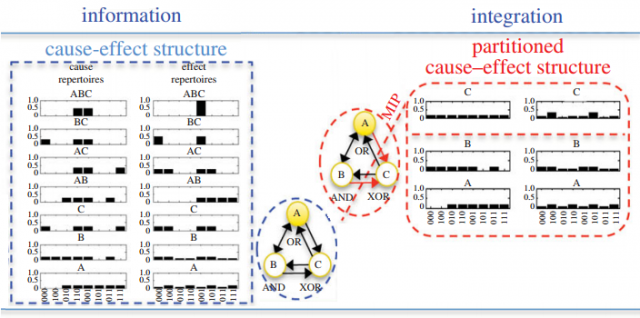

The third axiom, information, states that each conscious experience is specific: it differs from other possible experiences. The corresponding postulate claims that a specific composition of concepts differs from other possible ones. Here, the concept of information in IIT is not exactly the same as Shannon’s, which is measured by entropy (the expected amount of information contained in a message). Tononi uses neuroscientific language, defining information associated with experiences (qualia), such as color and shape, which cannot be experienced separately. This kind of information can be integrated, i.e., experienced as a unified experience (in Tononi’s words). This notion of information also differs from the one used in everyday language. It refers to how a system of mechanisms in a given state gives rise to a form (informs) in the space of all possibilities (qualia space).
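For contrast, the Shannon side of the distinction is easy to compute. The Python sketch below evaluates the textbook entropy formula H = Σ p·log2(1/p) for a probability distribution and for the symbol frequencies of a short message. The function names are my own choice, and nothing in it captures IIT's integrated information, which is precisely the point of the distinction.

```python
import math
from collections import Counter

def shannon_entropy(probabilities):
    """H = sum(p * log2(1/p)) in bits; zero-probability outcomes contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)

def entropy_of_message(message):
    """Empirical entropy (bits per symbol) from the symbol frequencies of a message."""
    counts = Counter(message)
    return shannon_entropy([c / len(message) for c in counts.values()])

print(shannon_entropy([0.5, 0.5]))   # fair coin → 1.0
print(shannon_entropy([0.25] * 4))   # four equiprobable symbols → 2.0
print(entropy_of_message("abab"))    # → 1.0
print(entropy_of_message("aaaa"))    # no uncertainty → 0.0
```

Entropy only counts how many distinguishable messages a source can emit; it says nothing about whether the parts of the system producing them are causally bound together.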

Apparently, IIT proposes two layers for the flow of information in a conscious system. The lower layer is the usual flow of Shannon information among its components. The upper layer is the causal relation among the components connected together in groups associated with the same experience (this kind of information also flows in space and time, but independently of the other). In my opinion, the rhetorical language Tononi uses to express his ideas is difficult for a physicist or a mathematician like myself to understand. As I understand it, in IIT there are two types of information: information that can be integrated and information that cannot. Consciousness is associated only with the information that can be integrated, although communication theory, computer science, and artificial intelligence currently use only the non-integrated kind.

The fourth axiom, integration, states that consciousness is unified, i.e., each experience is irreducible to non-interdependent components. For example, seeing a red triangle is irreducible to seeing a gray triangle plus the disembodied color red. The postulate of integration claims that the conceptual structure specified by the system is irreducible to that specified by non-interdependent subsystems. Integrated information is defined as the information that characterizes such a conceptual structure.
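Irreducibility to non-interdependent subsystems can be illustrated with a toy network of binary nodes. The Python sketch below rests on my own simplifying assumptions: deterministic nodes, a hypothetical `update` function that returns the next state of every node, and a plain yes/no criterion rather than a measure. It asks whether cutting the system into two parts severs any causal dependency; it is not how IIT actually computes integration.

```python
from itertools import product

def depends_on(update, n, target, source):
    """True if flipping node `source` in some past state changes node `target`'s next state."""
    for past in product((0, 1), repeat=n):
        flipped = list(past)
        flipped[source] ^= 1
        if update(past)[target] != update(tuple(flipped))[target]:
            return True
    return False

def is_reducible(update, n, part):
    """True if the cut (part | rest) severs no causal dependency in either direction,
    i.e. the system splits into two non-interdependent subsystems."""
    rest = [i for i in range(n) if i not in part]
    return not any(
        depends_on(update, n, t, s) or depends_on(update, n, s, t)
        for s in part
        for t in rest
    )

def swap(state):
    """Hypothetical 2-node system: each node copies the other one."""
    return (state[1], state[0])

def self_copy(state):
    """Hypothetical 2-node system: each node copies only itself."""
    return (state[0], state[1])

print(is_reducible(self_copy, 2, [0]))  # → True: two independent pieces
print(is_reducible(swap, 2, [0]))       # → False: the cut destroys causal links
```

Two nodes that only copy themselves split cleanly into independent pieces, whereas two nodes that copy each other cannot be cut without losing causal links, which is the kind of irreducibility the postulate refers to.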

The fourth postulate reminds me of the idea of an ontology [4] as used in the semantic web [5]. An ontology asks what kinds of things exist or can exist in a closed world, and what relations those things can have to each other. From a practical point of view, an ontology is a set of nouns (things) connected by verbs (actions). In this language, integrated information corresponds to the causal relations between the elements of the system that result in the phenomena. Tononi introduces the so-called qualia space (Q), which characterizes the quality of experiences. Integrated information is associated with the causal relations among the elementary mechanisms connected together to form a shape within Q, the shape itself being the description of the qualia that are experienced [2].
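In the semantic web, statements of this kind are usually encoded as subject-predicate-object triples (the RDF data model). The Python sketch below shows the "nouns connected by verbs" view in miniature; the facts about Ava and the red triangle are purely illustrative, and a plain set of tuples stands in for a real triple store.

```python
# Hypothetical toy ontology: every fact is a (subject, predicate, object) triple,
# i.e. a noun linked to another noun by a verb.
triples = {
    ("Ava", "is_a", "Robot"),
    ("Robot", "is_a", "Machine"),
    ("Ava", "sees", "RedTriangle"),
    ("RedTriangle", "has_color", "Red"),
    ("RedTriangle", "has_shape", "Triangle"),
}

def query(store, subject=None, predicate=None, obj=None):
    """Return the matching triples, sorted; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )

def is_a_closure(store, thing):
    """Follow 'is_a' links transitively, e.g. Ava -> Robot -> Machine."""
    found, frontier = [], [thing]
    while frontier:
        current = frontier.pop()
        for _, _, parent in query(store, subject=current, predicate="is_a"):
            if parent not in found:
                found.append(parent)
                frontier.append(parent)
    return found

print(query(triples, subject="RedTriangle"))
print(is_a_closure(triples, "Ava"))  # → ['Robot', 'Machine']
```

Note that the red triangle is stored as one thing with a color and a shape, rather than as a triangle and a disembodied red; in this analogy, the relations are what bind the description together.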

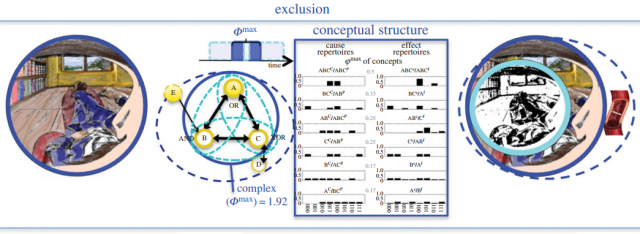

The last axiom, exclusion, says that consciousness is singular: it is not the superposition of multiple experiences. The postulate of exclusion states that conceptual structures cannot be superposed over the same elements, even in spatiotemporal terms. Tononi introduces the concept of irreducibility in order to define the complex: the set of elements that specifies the maximally irreducible conceptual structure of the system. Consciousness flows in space and time at a given speed, neither faster nor slower. As illustrated in Fig. 4, the experience I am having is of seeing a body on a bed in a bedroom and a bookcase with books, one of which is blue, but I am not having an experience with less content, say, one lacking the phenomenal distinction blue/not blue, or colored/not colored; nor am I having an experience with more content, say, one endowed with the additional phenomenal distinction high/low blood pressure.
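A toy version of the exclusion postulate: among all candidate subsets of a small network, keep the one whose integration is maximal and exclude the rest. In the Python sketch below, integration is approximated as the mutual information between past and present states of a subset (with uniformly perturbed past states) minus that of its two parts, minimized over all bipartitions. This loosely follows the minimum-information-partition idea of earlier versions of IIT; it is not the Φ of IIT 3.0 [2], and the network `toy_network` is hypothetical.

```python
import math
from collections import Counter
from itertools import combinations, product

def mutual_information(update, n, subset):
    """I(past; present) in bits for the nodes in `subset`. All n nodes start from a
    uniformly random past state, so nodes outside `subset` act as independent noise."""
    joint = Counter()
    for past in product((0, 1), repeat=n):
        present = update(past)
        joint[(tuple(past[i] for i in subset), tuple(present[i] for i in subset))] += 1
    total = 2 ** n
    p_past, p_present = Counter(), Counter()
    for (x, y), count in joint.items():
        p_past[x] += count
        p_present[y] += count
    # p(x,y) / (p(x) p(y)) written with raw counts: count * total / (count_x * count_y)
    return sum(
        (count / total) * math.log2(count * total / (p_past[x] * p_present[y]))
        for (x, y), count in joint.items()
    )

def phi(update, n, subset):
    """Toy integration: whole minus parts, minimized over bipartitions of `subset`."""
    subset = tuple(subset)
    if len(subset) < 2:
        return 0.0
    whole = mutual_information(update, n, subset)
    weakest = math.inf
    for k in range(1, len(subset) // 2 + 1):
        for part in combinations(subset, k):
            rest = tuple(i for i in subset if i not in part)
            parts = mutual_information(update, n, part) + mutual_information(update, n, rest)
            weakest = min(weakest, whole - parts)
    return weakest

def find_complex(update, n):
    """Exclusion, toy version: the subset with maximal integration wins."""
    candidates = [s for k in range(2, n + 1) for s in combinations(range(n), k)]
    return max(candidates, key=lambda s: phi(update, n, s))

def toy_network(state):
    """Hypothetical 3-node network: nodes 0 and 1 copy each other, node 2 copies itself."""
    a, b, c = state
    return (b, a, c)

print(find_complex(toy_network, 3))  # → (0, 1)
```

Nodes 0 and 1 form the complex. Node 2, which only talks to itself, is excluded: the full set can be split along the cut {0, 1} | {2} without losing any information, so its toy integration drops to zero.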

Tononi claims that his five axioms and postulates allow the amount of consciousness of a human subject to be quantified in a laboratory experiment; in fact, he has applied IIT to patients. The current mathematical formulation of IIT uses the concepts of relative entropy and effective information [3]. However, associating integrated information with the qualitative properties of phenomenological experiences observed in neuroscientific experiments depends heavily on the researcher’s interpretation.
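As a rough illustration of these two concepts, the Python sketch below follows the discrete, deterministic setting used in earlier versions of IIT: the effective information of a mechanism in a given output state is the relative entropy (Kullback-Leibler divergence) between the a posteriori repertoire, i.e. the input states that could have caused that output, and the a priori, maximum-entropy repertoire. The AND gate is only an example, and, as far as I know, IIT 3.0 [2] measures such differences with another distance, so this is not a full Φ calculation.

```python
import math
from itertools import product

def relative_entropy(p, q):
    """Kullback-Leibler divergence D(p || q) in bits; p and q map states to probabilities."""
    return sum(pi * math.log2(pi / q[state]) for state, pi in p.items() if pi > 0)

def effective_information(mechanism, n_inputs, output):
    """Relative entropy between the a posteriori repertoire (input states that could
    have produced `output`) and the a priori, maximum-entropy repertoire."""
    states = list(product((0, 1), repeat=n_inputs))
    prior = {s: 1 / len(states) for s in states}
    causes = [s for s in states if mechanism(s) == output]
    if not causes:
        raise ValueError("output can never be produced by this mechanism")
    posterior = {s: 1 / len(causes) for s in causes}
    return relative_entropy(posterior, prior)

def and_gate(inputs):
    return int(inputs[0] and inputs[1])

print(effective_information(and_gate, 2, 1))            # → 2.0
print(round(effective_information(and_gate, 2, 0), 3))  # → 0.415
```

Observing the gate "on" rules out three of the four input states and yields 2 bits; observing it "off" rules out only one, yielding log2(4/3) ≈ 0.415 bits.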

In my opinion, the formulation of the axioms and postulates of IIT is vague, closer to literature (in some cases, to poetry) than to the usual technical writing. Tononi, however, claims that some experimental predictions of IIT have been confirmed using neuroimaging. For example, the loss and recovery of consciousness should be associated with the breakdown and recovery of the brain’s capacity for information integration. Tononi claims to have confirmed this prediction using transcranial magnetic stimulation (TMS) combined with high-density EEG in conditions characterized by loss of consciousness (such as deep sleep and general anesthesia induced with several different agents) and in brain-damaged patients (vegetative, minimally conscious, emerging from minimal consciousness, or locked in). To me, this is a clear example of confirmation bias: Tononi tends to interpret the results in a way that confirms his own hypotheses.

There are several counterintuitive predictions of IIT. For example, it is commonly assumed that neurons in the cerebral cortex only contribute to consciousness if they are active in such a way that they broadcast the information they represent. According to IIT, however, neurons that are silent but could fire still contribute. If the same neurons were pharmacologically inactivated, they would cease to contribute to consciousness, even though their actual state (not firing) is the same. They would lack a cause-effect repertoire and thus would not be able to affect the states of the complex. Tononi interprets some experiments using optogenetics as a clear confirmation of these ideas.
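The inactivation argument can be mimicked with a single binary unit. In the Python sketch below, an active OR-like unit and the same unit after a hypothetical inactivation are both observed in the "off" state. For a deterministic unit with uniformly perturbed inputs, the information that state carries about its causes is log2 of the number of input states divided by the number of input states compatible with it. The units and their names are assumptions for illustration only.

```python
import math
from itertools import product

def cause_information(mechanism, n_inputs, state):
    """Bits by which a unit's current `state` narrows down its possible input states.
    Assumes a deterministic unit, uniformly perturbed inputs, and a reachable `state`:
    log2(total input states / input states compatible with `state`)."""
    inputs = list(product((0, 1), repeat=n_inputs))
    compatible = [s for s in inputs if mechanism(s) == state]
    return math.log2(len(inputs) / len(compatible))

def or_unit(inputs):
    """Hypothetical active unit: fires if either input fires."""
    return int(inputs[0] or inputs[1])

def silenced_unit(inputs):
    """The same unit after hypothetical pharmacological inactivation: always off."""
    return 0

print(cause_information(or_unit, 2, 0))        # → 2.0
print(cause_information(silenced_unit, 2, 0))  # → 0.0
```

The same "off" state constrains the past of the active unit (only two silent inputs can explain it) but says nothing about the inputs once the unit is silenced, which is the sense in which an inactivated neuron lacks a cause-effect repertoire.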

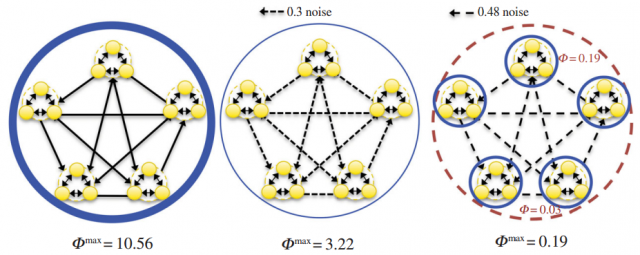

IIT offers an explanation for why consciousness is generated by the cerebral cortex (or at least some parts of it), but not by the cerebellum. The key property of the cerebral cortex, responsible for the content of consciousness, is that it is composed of elements that are functionally specialized and, at the same time, can interact rapidly and effectively. The cerebellum, instead, is composed of small sheet-like modules that process inputs and produce outputs largely independently of each other. According to IIT, such mere aggregates of neurons are not conscious.

In fact, complicated neural systems can be unconscious. According to IIT, a feed-forward artificial neural network, whose output depends only on the input imposed from outside the system, can only carry out tasks unconsciously. This holds even if such a network could replicate the input/output behavior of the human brain well enough to be indistinguishable from a human and pass any Turing test. Tononi and Koch [3] claim that there cannot be an ultimate Turing test for consciousness. Simulations of conscious neural systems can be unconscious.
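According to IIT, a purely feed-forward network, one whose wiring contains no directed loop, cannot form a complex. The Python sketch below checks a hypothetical wiring diagram, given as a dictionary from each node to the nodes it projects to, for directed cycles using a depth-first search. Finding a loop is only a necessary condition: it does not imply that IIT would assign the network nonzero integrated information.

```python
def has_feedback(connections):
    """True if the directed wiring diagram contains a cycle (a recurrent path).
    `connections` maps each node to the nodes it projects to."""
    nodes = set(connections) | {v for targets in connections.values() for v in targets}
    state = dict.fromkeys(nodes, "unvisited")

    def visit(u):
        state[u] = "on_stack"
        for v in connections.get(u, ()):
            if state[v] == "on_stack":  # back edge: the path returned to one of its own nodes
                return True
            if state[v] == "unvisited" and visit(v):
                return True
        state[u] = "done"
        return False

    return any(state[u] == "unvisited" and visit(u) for u in nodes)

feed_forward = {"input": ["hidden"], "hidden": ["output"]}
recurrent = {"a": ["b"], "b": ["a"]}
print(has_feedback(feed_forward))  # → False
print(has_feedback(recurrent))     # → True
```

A self-connection such as `{"x": ["x"]}` also counts as feedback, since the node's own past state affects its next one.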

Tononi and Koch [3] claim that consciousness is common among biological organisms (at least among mammals), but that digital computers, even if they were to run faithful simulations of the human brain, would experience next to nothing (in the authors’ words). In IIT, a big enough ANN will spontaneously develop behavior similar to that of a cerebellum. It may pass Turing tests, but this is not enough. Only an ANN properly designed to simulate the conscious states of the cerebral cortex can act as a complex, like the artificial brain of the humanoid robot Ava in Ex Machina.

References

- [1] Giulio Tononi, “An information integration theory of consciousness,” BMC Neuroscience 5: 42 (2004), doi: 10.1186/1471-2202-5-42.

- [2] Masafumi Oizumi, Larissa Albantakis, Giulio Tononi, “From the phenomenology to the mechanisms of consciousness: integrated information theory 3.0,” PLoS Computational Biology 10: e1003588 (2014), doi: 10.1371/journal.pcbi.1003588.

- [3] Giulio Tononi, Christof Koch, “Consciousness: Here, There but Not Everywhere,” Philosophical Transactions of the Royal Society B 370: 20140167 (2015), doi: 10.1098/rstb.2014.0167; arXiv:1405.7089 [q-bio.NC].

- [4] Thomas R. Gruber, “Toward Principles for the Design of Ontologies Used for Knowledge Sharing,” International Journal of Human-Computer Studies 43: 907-928 (1995). http://goo.gl/fjzJgp

- [5] Tim Berners-Lee, James Hendler, Ora Lassila, “The Semantic Web,” Scientific American Magazine (May 17, 2001). http://goo.gl/i34fsk

4 comments

[…] contribution “‘Ex Machina’ and the theory of consciousness,” to the blog Mapping Ignorance, 01 Jun 2015. The first paragraph, in English, says “The sci-fi thriller Ex Machina (2015), […]

[…] What is consciousness? How does it arise? Can a machine become conscious? How could we determine that it is conscious? Giulio Tononi’s theory is very promising, but Francisco R. Villatoro finds fault with it in ‘Ex Machina’ and the theory of […]

[…] happens in another film from the same year, Ex Machina, which carries out the fantasy of generating a complete, fully self-referential consciousness that can learn by accumulating information (conscious and unconscious memories); […]

[…] There’s so much to choose from in the genre — including “Transcendence,” “Blade Runner,” “Inside Out,” “Lucy,” and “Upload.” But Koch says his favorite is “Ex Machina,” the 2014 film in which a sexy synthetic human develops a mind […]