An evidence-based educational strategy to reduce illusions of causality

Imagine you are invited to a demonstration at your local shopping center. You are shown a new product: a little ring made of a very special material developed at a highly regarded research center. The scientists describing the ring provide all kinds of information and answer your questions clearly and convincingly. They offer you and the other visitors the chance to test the ring's properties, which are designed to have an appreciable impact on both your physical and cognitive abilities. You all accept. You first test its cognitive-enhancing properties. Each of you takes a ring. It shines; it is beautiful. The scientists ask you to hold it tight in your left hand while you do the exercises. You quickly work through a series of attention, memory, and other tests. Most of you agree that the product has improved your speed and accuracy: you have worked faster and better than you usually do. After that, you and the others try a few physical exercises suggested by the scientists leading the demonstration. The first time, you do these exercises without the ring; the second time, for comparison purposes, you are allowed to wear it. This second time, with the ring, your performance is clearly better than the first. There is no doubt the ring works. You are all now ready to buy it.

Unless…

Unless you have received a good scientific education and have been able to spot the serious flaws in the demonstration. When you first performed the cognitive tests, you and your peers were all holding the ring. Thus, there is no way you could assess the power of the ring, because you do not know how you would have performed without it. The ring seemed to work (probably due to a placebo effect), but the truth is that you cannot tell: you do not know your baseline for these tests. The conditions under which the subsequent physical exercises were performed were even worse. The first time, all of you performed the exercises without the ring. The second time, much more successfully, all of you were wearing the ring. Once again, there are alternative explanations for your improvement that need to be considered. For example, the effect of practice alone could easily explain why the second attempt was better. Therefore, the intuitive, effortless feeling that the ring was working could be just that, a feeling, an impression… and quite possibly a cause-effect illusion. Neither the cognitive nor the physical exercises included an adequate control condition that would allow you to discriminate between a real and an illusory causal relationship. Therefore, no evidence supporting the product was actually shown. Even so, most people would be willing to buy the ring just because it seemed to work for them. Causal illusions are a form of cognitive bias, and they are a serious problem that affects the way many people make important decisions in their lives.
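The flaw in the physical-exercise comparison can be stated compactly. As a purely illustrative assumption (the demonstration supplies no numbers or model), suppose each score is a baseline $b$, plus a practice gain $p$ on the second attempt, plus a ring effect $r$ whenever the ring is worn. The first and second attempts then give:

$$
\begin{aligned}
Y_1 &= b, \qquad Y_2 = b + p + r, \\
D &= Y_2 - Y_1 = p + r.
\end{aligned}
$$

One observed difference cannot separate two unknowns: $D > 0$ is just as consistent with $r = 0$ and $p > 0$ as with a working ring. A control group that repeats the exercises without the ring both times (randomly assigned, so it shares the same $b$ and $p$ on average) would give $D_{\text{ctrl}} = p$, and then $D - D_{\text{ctrl}} = r$ isolates the ring's effect. In the cognitive tests, where nobody performed without the ring, there is not even a $Y_1$ to compare against.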

Causal illusions are often at the heart of pseudoscientific practices (those that appear to be scientific but lack any scientific evidence supporting them), and they often guide the decisions of today’s citizens in areas as diverse as finance, health, justice, and education, to name a few. A particularly worrisome example is alternative medicine (i.e., medical treatments which, like the ring in our story, have not been shown to be any better than placebo). As some have argued, causal illusions and similar cognitive biases are also among the main causes of prejudice, intolerance, war, and crime on this planet (see, e.g., Lilienfeld, 2007). Surprisingly, however, very little evidence exists on how these biases can be reduced.

With this in mind, Barberia, Blanco, Cubillas & Matute (2013) conducted an experiment to develop and test a strategy for debiasing adolescents against causal illusions. The teenagers (mean age 14 years) were randomly assigned to one of two groups. Those in the experimental group were first led to believe that a small piece of ordinary ferrite could enhance their cognitive and physical abilities. Using several exercises similar to those described above, the teenagers were convinced that the product was working beautifully and was improving both their physical and cognitive abilities. By the end of the demonstration, many of them reported that they would have bought the piece of ferrite. They were then shown the problems with this kind of thinking, which was based on personal impressions, and the need to conduct controlled tests with and without the product in order to detect whether it had any real effect. Thus, they received an educational workshop equivalent to the one described in the first two paragraphs above: they were first fooled so that they would become aware of their own biases, and then they were shown why it would have been a great mistake to buy the product. By the end of the workshop, they clearly understood their own biases and could not believe they had been fooled so easily. They also understood how they could have performed, or requested, better tests of the product’s powers. This was assessed in a subsequent test phase.

The efficacy of this workshop was assessed with a subsequent generalization test that was very different from the first phase. This test used a computer program widely used in experiments on causal judgments and causal illusions. The students’ task was to heal a series of fictitious patients who appeared on the computer screen one by one, all suffering from the same disease. On each trial, the students could choose whether or not to administer a fictitious medicine called Batatrim to the current patient, and then observe the result. The fictitious patients showed a high rate (75%) of spontaneous recovery. The sequence of spontaneous recoveries had been programmed in advance, so it was completely independent of whether the students administered the treatment to any given patient. Thus, the treatment was completely ineffective, although the students were not told this. Once all the patients had been observed, the students were asked to estimate to what degree they believed the medicine was causing the recoveries.
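The logic of this generalization test can be sketched as a small simulation. This is a hypothetical reconstruction, not the program Barberia et al. used: the number of patients, the participant's 50% administration rate, and all function names are assumptions for illustration. Recoveries come from a sequence fixed in advance with a 75% recovery rate, so they cannot depend on the participant's choices. The result is scored with ΔP, the recovery rate with the medicine minus the recovery rate without it, a common normative index in causal-learning research that is used here only as a yardstick (not necessarily the measure reported in the study).

```python
import random


def make_outcome_sequence(n_patients: int, p_recovery: float, seed: int) -> list[bool]:
    """Pre-programmed spontaneous recoveries (True = patient recovers).

    The sequence is fixed before the task starts, so it cannot depend
    on anything the participant does.
    """
    rng = random.Random(seed)
    return [rng.random() < p_recovery for _ in range(n_patients)]


def run_task(outcomes: list[bool], p_give: float, seed: int) -> list[tuple[bool, bool]]:
    """Hypothetical participant who gives the medicine with probability p_give.

    Returns (gave_medicine, recovered) pairs; 'recovered' is read from the
    pre-programmed sequence regardless of the choice made on that trial.
    """
    rng = random.Random(seed)
    return [(rng.random() < p_give, recovered) for recovered in outcomes]


def recovery_rate(trials: list[tuple[bool, bool]], with_medicine: bool) -> float:
    """Proportion of recoveries among patients who did (or did not) get the medicine."""
    group = [rec for gave, rec in trials if gave == with_medicine]
    if not group:
        # Without patients in one condition there is no baseline to compare against.
        raise ValueError("no patients in this condition; the comparison is impossible")
    return sum(group) / len(group)


def delta_p(trials: list[tuple[bool, bool]]) -> float:
    """Delta P = P(recovery | medicine) - P(recovery | no medicine)."""
    return recovery_rate(trials, True) - recovery_rate(trials, False)


if __name__ == "__main__":
    outcomes = make_outcome_sequence(n_patients=10_000, p_recovery=0.75, seed=1)
    trials = run_task(outcomes, p_give=0.5, seed=2)
    print(f"recovery with medicine:    {recovery_rate(trials, True):.2f}")
    print(f"recovery without medicine: {recovery_rate(trials, False):.2f}")
    print(f"Delta P:                   {delta_p(trials):+.3f}")
```

Both recovery rates land near 75%, so ΔP comes out close to zero: the medicine does nothing. Yet a participant who attends only to the patients who took Batatrim sees roughly three recoveries for every failure, which is exactly the impression that feeds the illusion.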

Previous research using this fictitious-patients task has shown that most people tend to develop an illusion of causality under these conditions. In Barberia et al.’s experiment, the control participants had not received the educational workshop when they completed this test, so they provided the baseline for the illusion of causality (for ethical reasons, they were also offered the workshop, but only after the experiment was completed). The only difference between them and the experimental participants was that the experimental participants performed the fictitious-patients test after completing the educational workshop. Experimental participants were therefore expected to do better than control participants.

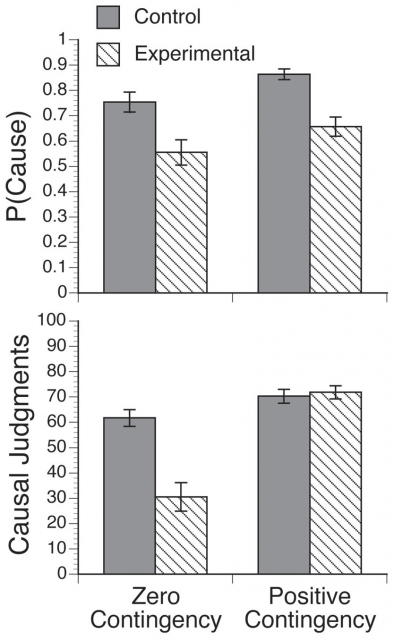

The results are summarized in Figure 1. As in previous experiments using this task (see Barberia et al., 2013, for references to previous research), the high rate of spontaneous recovery produced a strong illusion of causality in the control group. That is, control participants developed the illusion that the medicine was effective. By contrast, experimental participants (those who received the workshop before the causal-illusion assessment) showed a significantly weaker illusion that the medicine was causing the recoveries.

Thus, the intervention developed by Barberia et al. (2013) was effective in reducing the illusion of causality. It taught the experimental participants how to think critically and how to apply the principles of scientific control rather than jump to easy conclusions based on impressions, feelings, and cognitive biases. Moreover, the positive effects of this educational strategy transferred to a different task that required the same basic scientific principles. The students who had attended the workshop tested the medicine more rigorously during the assessment task and reached more accurate conclusions about its efficacy. The control participants, however, showed the default behavior and the illusion: they tended to give the medicine to a large number of patients and failed to detect that the patients would have recovered just as often without the pill.
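Why giving the medicine to most patients is a problem can be seen by counting what a participant actually gets to observe. The sketch below computes expected counts for the four combinations of medicine and recovery when recovery is independent of the medicine. The block of 100 patients and the two administration rates compared (90% and 50%) are illustrative assumptions, not values reported in the study; only the 75% recovery rate comes from the task description.

```python
from dataclasses import dataclass


@dataclass
class ContingencyTable:
    """Expected counts for the four medicine/outcome combinations."""
    medicine_recovered: float
    medicine_not_recovered: float
    no_medicine_recovered: float
    no_medicine_not_recovered: float

    @property
    def untreated(self) -> float:
        # Untreated patients are the only source of a baseline (control) comparison.
        return self.no_medicine_recovered + self.no_medicine_not_recovered


def expected_table(n_patients: int, p_give: float, p_recovery: float) -> ContingencyTable:
    """Expected counts when recovery does not depend on the medicine at all."""
    treated = n_patients * p_give
    untreated = n_patients - treated
    return ContingencyTable(
        medicine_recovered=treated * p_recovery,
        medicine_not_recovered=treated * (1 - p_recovery),
        no_medicine_recovered=untreated * p_recovery,
        no_medicine_not_recovered=untreated * (1 - p_recovery),
    )


for p_give in (0.9, 0.5):
    t = expected_table(n_patients=100, p_give=p_give, p_recovery=0.75)
    print(f"give medicine to {p_give:.0%} of patients:")
    print(f"  medicine & recovered:    {t.medicine_recovered:5.1f}")
    print(f"  untreated patients seen: {t.untreated:5.1f}")
```

With 90% administration, about 67 of the 100 patients fall into the "medicine and recovered" cell, while only about 10 untreated patients are available to reveal that people recover without the pill too. Giving the medicine to half of the patients yields 50 untreated cases, a far sounder baseline, even though the recovery rate is 75% in both conditions either way.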

This is the first evidence-based educational strategy designed to reduce an illusion of causality. Integrating this strategy into larger educational programs should help people recognize their own biases and reduce them. This would certainly contribute to building a so-called knowledge-based society (i.e., one that bases its decisions on knowledge rather than on subjective impressions and cognitive illusions).

2 comments

Very good article, Helena. Do you have it in Spanish?

Thanks, Luis. I don't have it in Spanish, but Javier Peláez published an excellent post in Spanish on this topic at http://aldea-irreductible.blogspot.com.es/2013/09/la-enorme-necesidad-de-una-asignatura.html, and so did Miguel A. Vadillo at http://mvadillo.com/2013/09/05/como-ensenar-el-pensamiento-critico-el-valor-de-la-investigacion-basica/

I hope you find it useful.

Best regards!