The illusion of control: Cognitive bias or self-esteem problem?

The illusion of control lies at the heart of superstitious and pseudoscientific beliefs. It is the belief that we control events that actually occur independently of our behavior. It is a very common illusion, found in most people, particularly when desired events occur frequently but uncontrollably. Examples abound not only in real life but also in the psychological laboratory. The illusion can be produced in experimental participants using points in a video game, lights and tones that turn on or off independently of the participant’s attempts to control them, spontaneous remissions of pain in fictitious patients in a computer game, or fictitious stock prices that may or may not rise as a function of several potential (and equally fictitious) causes. In all these cases, people tend to believe that they control the events they are trying to obtain, even when those events have been programmed to occur in a predetermined sequence. In real life, this often leads to decisions based on coincidences and cognitive illusions rather than on verified knowledge, in areas as diverse as health, finance, and education.

A question of interest is why this illusion occurs. According to traditional accounts, the illusion of control stems from a need to protect self-esteem from the negative consequences of losing control. On this view, it is a defense mechanism that lets us feel in control, and so maintain our self-esteem, when we face events we cannot control. More recent accounts, however, suggest that the illusion of control results from the way our cognitive system associates causes and effects. In this framework, the illusion is a normal cognitive effect, not a motivational defense mechanism. Because our cognitive system constantly needs to associate possible causes with possible effects, and because desired events sometimes occur with high probability, those events often become associated with our behavior as their most probable cause. This is particularly likely when we act frequently in order to obtain those events. In such cases the number of coincidences between our behavior and the desired events is high, and the connection between them is strengthened even when their co-occurrence is due to mere chance.

The motivational and cognitive accounts make different predictions. On the one hand, if enhancing self-esteem is necessary for the illusion of control to develop, then the illusion should occur when self-esteem is at risk. Thus, it should occur when experimental participants are the agents (that is, when they are personally involved in obtaining the event, so that their own behavior is a potential cause). In those cases, realizing that they cannot control the occurrence of the desired event may threaten their self-esteem, so, according to the motivational account, these cases should be prone to the illusion of control. By contrast, the illusion should not occur when participants are mere observers of the cause-effect relationship.

On the other hand, according to the cognitive account, what counts is the number of coincidences between cause and effect. Thus, the higher the probability with which the cause occurs, the stronger the illusion should be (assuming, of course, that the desired event is also occurring frequently, as it does in the conditions under which the illusion tends to appear, so that the two are likely to coincide). This should happen regardless of whether the experimental participant is a mere observer or an active participant. Understanding how the illusion occurs is important if we are to reduce its impact.
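
A short worked calculation shows why the probability of the cause matters even when the cause is useless. Assuming a cause that is unrelated to the effect, and borrowing the values of the experiment described below (N = 100 trials, an effect that occurs with probability .80), the expected number of cause-effect coincidences is simply the number of trials times the two probabilities:

$$
E[\text{coincidences}] = N \cdot P(C) \cdot P(E)
$$

$$
P(C) = .70:\; 100 \times .70 \times .80 = 56 \qquad\qquad P(C) = .30:\; 100 \times .30 \times .80 = 24
$$

In both cases the effect follows the cause on 80% of the trials on which the cause is present, exactly as often as when it is absent; only the raw number of coincidences changes.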

A recent series of experiments by Yarritu, Matute and Vadillo (2013) has pitted these two accounts against each other. The researchers used a variation of a fictitious medical task that is often used in the study of causal learning and causal illusions. Participants were randomly assigned to one of two groups: active and yoked. Both groups were exposed to the medical records of 100 fictitious patients, presented one at a time on the computer screen. All patients suffered from the same fictitious syndrome. The task of the active participants was to help their fictitious patients recover from the disease: on each trial, they could decide whether or not to give a medicine to the patient and then observe the result. Each yoked participant did nothing but watch the screen of one active participant. The probability of spontaneous recovery was programmed to be high (80%). That is, even if the active participants had decided not to administer the medicine to any of the patients, 80% of them would have recovered anyway. Participants were not informed of this feature of the design. Based on previous results with this task, active participants could be expected to administer the medicine to a large number of patients and, as a result, to develop a strong illusion of control.

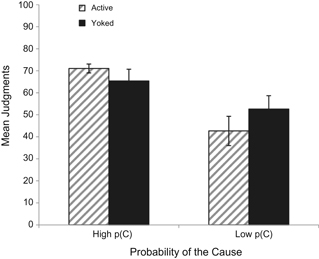

To test whether the probability of the cause (in this case, the probability with which the active participants administered the medicine) affected the development of the illusion, Yarritu and colleagues manipulated the number of doses available to the active participants. Half of them had 7 doses for every 10 patients; the other half had 3. As a result, the probability of the cause was close to .70 in the high p(C) group and close to .30 in the low p(C) group, both for the active participants and for the yoked participants observing them.

At the end of the experiment, all participants were asked to rate the degree to which they thought the medicine was the cause of the fictitious patients’ recovery. As mentioned above, the medicine was completely ineffective: the probability of recovery was .80 regardless of whether a patient received the medicine. If the probability of the cause is an important variable in producing the illusion, as the cognitive account proposes, then the high p(C) group should show a stronger illusion than the low p(C) group, and this should hold regardless of whether participants belonged to the active or the yoked (i.e., observer) group. If, on the other hand, what matters is the level of personal involvement and the need to protect self-esteem, then only active participants should develop the illusion. They were not only trying to heal as many patients as possible but were also being watched by their yoked partners, so their self-esteem was at stake. Yoked participants, by contrast, were mere observers whose self-esteem was not threatened.
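
A standard way to quantify the actual relationship in causal learning research is the contingency index ΔP (a measure not named in this article): the probability of the effect when the cause is present minus its probability when the cause is absent. With C standing for giving the medicine and E for recovery, the programmed values give:

$$
\Delta P = P(E \mid C) - P(E \mid \neg C) = .80 - .80 = 0
$$

A ΔP of zero means the medicine made no difference, so by this measure any judgment that it caused recovery reflects an illusion, whichever group the participant was in.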

As can be seen in Figure 1, the strongest illusion occurred in those participants for whom the cause (whether their own behavior or that of their active partner) occurred with high probability. This supports the idea that the illusion of control develops in the same way as the learning of any other cause-effect relationship. Given that the effect occurs frequently, if the cause also occurs frequently, the resulting high number of coincidences strengthens the cause-effect association, and this produces a feeling of control. This feeling often reflects a true cause-effect relationship, but it can also arise when the coincidences are accidental. On those occasions the relationship is perceived as real even though it is illusory. Discriminating between the two requires effortful testing: checking what happens both when the cause is present and when it is absent, something most people do not usually do spontaneously. In this experiment, the low p(C) group was, in a way, forced to perform that test and to learn what happened when the medicine was not administered. This allowed them to learn that the medicine was completely ineffective, something the high p(C) group did not learn. The interesting point is that participants in the yoked (observer) group developed exactly the same illusion (or absence of illusion) as their active counterparts. Thus, the results suggest that the illusion is determined by the observed cause-effect coincidences rather than by a need to protect self-esteem.
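
The contrast between the two groups can be illustrated with a small simulation. This is a hypothetical model, not the authors' procedure: it assumes that an active participant uses every available dose, placing the doses at random within each block of 10 patients, and that each patient recovers with probability .80 regardless of treatment. The names `Contingency` and `simulate_session` are illustrative only. Because a yoked observer watches the same screen, the same table describes what both partners saw.

```python
import random
from dataclasses import dataclass


@dataclass
class Contingency:
    """2x2 table of trials: cause = medicine given, effect = recovery."""
    a: int = 0  # medicine given, patient recovered (a cause-effect coincidence)
    b: int = 0  # medicine given, patient did not recover
    c: int = 0  # no medicine, patient recovered
    d: int = 0  # no medicine, patient did not recover

    def delta_p(self) -> float:
        """P(recovery | medicine) - P(recovery | no medicine)."""
        return self.a / (self.a + self.b) - self.c / (self.c + self.d)


def simulate_session(doses_per_ten: int, n_patients: int = 100,
                     p_recovery: float = 0.8, seed: int = 0) -> Contingency:
    """Hypothetical model of one active participant's session.

    Assumes every dose is used, spread at random within each block of
    10 patients, and that recovery is independent of the medicine.
    """
    rng = random.Random(seed)
    table = Contingency()
    for start in range(0, n_patients, 10):
        block_size = min(10, n_patients - start)
        treated = set(rng.sample(range(block_size), min(doses_per_ten, block_size)))
        for i in range(block_size):
            given = i in treated
            recovered = rng.random() < p_recovery  # same 80% with or without medicine
            if given and recovered:
                table.a += 1
            elif given:
                table.b += 1
            elif recovered:
                table.c += 1
            else:
                table.d += 1
    return table


# A yoked observer sees exactly the same trials as the active partner.
for doses in (7, 3):
    t = simulate_session(doses, seed=1)
    print(f"{doses} doses per 10 patients: coincidences={t.a}, "
          f"no-medicine trials={t.c + t.d}, delta P={t.delta_p():+.2f}")
```

Running it for 7 and 3 doses per 10 patients should show many more coincidences in the high p(C) condition, while ΔP fluctuates around zero in both; the low p(C) condition simply provides far more trials without the medicine, which is where the absence of any effect becomes visible.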

This research has important implications for the development of strategies to reduce the illusion of control. It suggests that the illusion is best addressed as a systematic cognitive bias in the detection of causal relationships rather than as an emotional or motivational problem. Indeed, some educational programs based on the cognitive approach are already being tested with success (see Barberia, Blanco, Cubillas & Matute, 2013). This is not to say that emotions and self-esteem play no role in the development of these illusions, but cognitive biases have been shown to be critical, and this can greatly facilitate the development of educational strategies to prevent the spread of these illusions worldwide.

References

- Yarritu, I., Matute, H., & Vadillo, M. A. (2013). Illusion of control. Experimental Psychology (formerly Zeitschrift für Experimentelle Psychologie), 1-10. DOI: 10.1027/1618-3169/a000225
