Soft-bounded synapses increase neural network capacity

Neurons in your brain are interconnected through synapses, forming what are known as neural networks. In a simplified but conceptually useful view, each synapse can be seen as a “link” between two neurons, and it has an associated strength. This strength indicates how reliably the synapse transmits information from one neuron to the other. For instance, a strong synapse may transmit signals from one neuron (the presynaptic neuron) to another (the postsynaptic neuron) in a highly reliable fashion. A weak synapse, on the other hand, fails to transmit a significant fraction of those signals.

It is usually assumed that memory is somehow encoded in the strength of the synapses. More than half a century ago, the Canadian psychologist Donald Hebb laid out the basic idea with his famous rule 1, which states that neurons that fire at the same time tend to form stronger synaptic connections than those that do not. Since then, much experimental work has been devoted to understanding this synaptic reinforcement, part of what is known as synaptic plasticity (leading, for instance, to the discovery of spike-timing-dependent plasticity 2), while theoreticians have developed models that explain the emergence of memory as a consequence of Hebb’s rule 3.
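Hebb’s rule and the Hopfield-type models it inspired (reference 3) can be illustrated with a short NumPy sketch. It assumes binary ±1 neurons, the standard outer-product Hebbian learning rule (units with the same sign in a pattern strengthen their connection), synchronous updates, and arbitrary sizes (200 units, 3 stored patterns, 10% of the cue corrupted). The function names `store` and `recall` are hypothetical; this is a textbook illustration, not the model used in the study discussed below.

```python
import numpy as np


def store(patterns):
    """Hebbian (outer-product) weights for +/-1 patterns, Hopfield-style."""
    n = patterns.shape[1]
    # Units that are co-active (same sign) in a pattern strengthen their link
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)  # no self-connections
    return w


def recall(w, state, steps=5):
    """Synchronous updates: each unit takes the sign of its summed input."""
    s = state.copy()
    for _ in range(steps):
        s = np.where(w @ s >= 0, 1, -1)
    return s


rng = np.random.default_rng(1)
n_units, n_patterns = 200, 3  # illustrative sizes (assumption)
patterns = rng.choice([-1, 1], size=(n_patterns, n_units))
w = store(patterns)

# Corrupt 10% of the first pattern and use it as a retrieval cue
cue = patterns[0].copy()
flip = rng.choice(n_units, size=20, replace=False)
cue[flip] *= -1

result = recall(w, cue)
overlap_before = (cue @ patterns[0]) / n_units  # 1.0 means a perfect match
overlap_after = (result @ patterns[0]) / n_units
print(f"overlap before: {overlap_before:.2f}, after: {overlap_after:.2f}")
```

With only a few patterns stored in a network of this size, the Hebbian weights typically pull the corrupted cue back to the stored pattern, which is the sense in which memory "emerges" from Hebb’s rule.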

As experimentalists uncover more detailed biophysical mechanisms, synaptic plasticity becomes better understood, and new ingredients can be included in theoretical models. For instance, taken literally, the brief description of Hebb’s rule given above suggests that if two neurons keep firing together for long enough, the strength of the synapse linking them would grow to enormous values. This is of course unrealistic, since the strength of a synapse is naturally constrained to a certain range of biophysically plausible values. How such a constraint affects the memory properties of a neural network, however, is not well understood.
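The runaway growth described above is easy to reproduce. In this minimal sketch, a plain Hebbian update adds `eta * pre * post` to the weight, so two neurons that always fire together keep strengthening their synapse indefinitely; the simplest fix is to clip the weight to a fixed range, which is what a "hard" bound does. The learning rate `eta`, the cap `w_max` and the function name `hebbian_step` are illustrative assumptions, not taken from the article.

```python
def hebbian_step(w, pre, post, eta=0.1, w_max=None):
    """One Hebbian update: the increment is eta * pre * post.

    If w_max is given, the result is clipped to [0, w_max] (a hard bound).
    """
    w = w + eta * pre * post
    if w_max is not None:
        w = min(max(w, 0.0), w_max)
    return w


# Two neurons that always fire together (pre = post = 1) for 100 updates
w_free, w_capped = 0.0, 0.0
for _ in range(100):
    w_free = hebbian_step(w_free, pre=1.0, post=1.0)
    w_capped = hebbian_step(w_capped, pre=1.0, post=1.0, w_max=1.0)

print(f"unbounded: {w_free:.2f}, hard-bounded: {w_capped:.2f}")
```

The hard bound stops the growth but leaves the update itself unchanged: every synapse receives the same step until it hits the limit.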

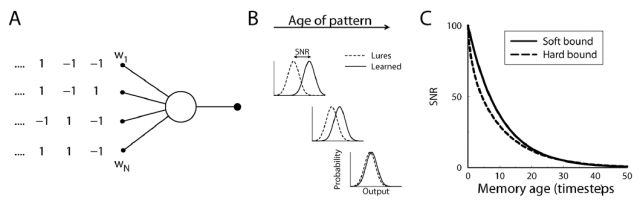

Recently, researchers from the Institute for Adaptive and Neural Computation in Edinburgh (UK) introduced experimentally motivated synaptic constraints to investigate their effects on the ability of a neural network to store and retrieve information 4. Based on previous experimental findings, van Rossum and his collaborators assumed that synaptic plasticity depends not only on the firing of the presynaptic and postsynaptic neurons, but also on the strength of the connection at the time the plasticity occurs. In other words, not all synapses change their strength in the same way: weak synapses can be strengthened easily, whereas synapses that are already strong have a harder time becoming even stronger, so the relative increase in their strength is small.
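A weight-dependent rule of this kind is often written in a simple linear "soft-bound" form: potentiation is proportional to the distance from an upper bound `w_max`, and depression is proportional to the current weight. The sketch below assumes that particular form, along with the parameters `eta` and `w_max` and the function name `soft_bound_update`. The paper considers several weight dependencies, so this is one illustrative instance rather than the authors’ exact rule.

```python
def soft_bound_update(w, potentiate, eta=0.1, w_max=1.0):
    """One weight-dependent ("soft-bound") plasticity step.

    Assumed linear form (illustrative, not necessarily the paper's rule):
    potentiation is proportional to the room left below w_max,
    depression is proportional to the current weight.
    """
    if potentiate:
        return w + eta * (w_max - w)
    return w - eta * w


# The same potentiation event helps a weak synapse far more than a strong one
weak_gain = soft_bound_update(0.1, potentiate=True) - 0.1
strong_gain = soft_bound_update(0.9, potentiate=True) - 0.9
print(f"gain at w=0.1: {weak_gain:.3f}, gain at w=0.9: {strong_gain:.3f}")

# Repeated potentiation approaches w_max but never exceeds it
w = 0.0
for _ in range(50):
    w = soft_bound_update(w, potentiate=True)
print(f"after 50 potentiations: w = {w:.4f}")
```

Unlike a hard bound, no clipping is needed: the steps shrink on their own as the weight nears either end of its range.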

Neural networks with these constrained synapses, referred to as soft-bounded synapses, were studied using numerical simulations and theoretical calculations. Surprisingly, soft-bounded synapses not only preserve the memory capacity of the network, but even improve its performance at storing and retrieving information. Using information-theoretic measures, van Rossum and his collaborators found that the information capacity of neural networks with soft-bounded synapses is about 18% higher than with classical hard-bounded synapses (where synaptic strengths are confined to a strict range and plasticity depends only on the presynaptic and postsynaptic activity). This improvement was found for different dependencies of plasticity on synaptic strength, suggesting that a large family of soft-bound plasticity rules shares this improved performance.

Of special interest is that the improvement in information capacity is particularly strong for recently stored information. Patterns that were stored long ago in the simulations did not show the 18% improvement. This highlights a positive role of soft-bounded synaptic mechanisms in the retrieval of recent memories, but older memories also fare better with soft-bounded synapses: as van Rossum and colleagues showed, the typical lifetime of a memory is about 25% longer with soft-bounded synapses than with hard-bounded ones.
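Why recent memories are stronger than old ones can be illustrated with a toy "palimpsest" model, in which every new pattern partly overwrites the old ones. This is an assumption-laden sketch, not the paper’s model: it uses the linear soft-bound steps w + a(1 − w) and w − a·w on weights in [0, 1], stores random patterns that potentiate or depress each synapse with probability 1/2, and measures a memory’s signal as the mean weight of the synapses it potentiated minus that of the synapses it depressed. Under these assumptions the expected signal of a memory of age t is a(1 − a)^t, so each newer pattern shrinks an old trace by a factor (1 − a). The sketch does not compute the paper’s information measure, does not model hard bounds, and does not reproduce the 18% or 25% figures; the name `soft_bound_trace` and all parameter values are hypothetical.

```python
import numpy as np


def soft_bound_trace(n_syn=20_000, n_burn=200, n_later=30, a=0.1, seed=0):
    """Toy model: follow one stored pattern while newer random patterns overwrite it.

    Assumptions: each pattern potentiates (+1) or depresses (-1) every synapse
    with probability 1/2, using the soft-bound steps w + a*(1 - w) and w - a*w.
    Returns the memory signal at ages 0..n_later.
    """
    rng = np.random.default_rng(seed)

    def update(w, pattern):
        return np.where(pattern > 0, w + a * (1.0 - w), w - a * w)

    def random_pattern():
        return rng.choice([-1, 1], size=n_syn)

    def signal(w, tracked):
        # Mean weight of synapses the tracked pattern potentiated,
        # minus the mean weight of those it depressed
        return w[tracked > 0].mean() - w[tracked < 0].mean()

    w = np.full(n_syn, 0.5)
    for _ in range(n_burn):  # let the weight distribution settle before storing
        w = update(w, random_pattern())

    tracked = random_pattern()  # the memory whose fate we follow
    w = update(w, tracked)
    signals = [signal(w, tracked)]
    for _ in range(n_later):
        w = update(w, random_pattern())
        signals.append(signal(w, tracked))
    return np.array(signals)


a = 0.1
simulated = soft_bound_trace(a=a)
predicted = a * (1 - a) ** np.arange(len(simulated))  # expected decay a(1 - a)^t
for age in (0, 5, 10, 20):
    print(f"age {age:>2}: simulated {simulated[age]:.4f}, predicted {predicted[age]:.4f}")
```

In this toy model, a larger `a` gives a stronger initial trace (signal a) but faster forgetting (a factor 1 − a per new pattern).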

Contrary to what one might expect a priori, adding realistic constraints to a neural network model may improve its performance rather than degrade it. In the case presented here, improvements of up to 18% in information capacity and 25% in memory lifetime were obtained simply by introducing some biological realism into the synaptic connections. These findings should help us understand how our brain stores and retrieves memories, and they may also be useful for building better classification and recognition algorithms, since many such algorithms are based on Hebb’s rule and the neural network paradigm. Whether other realistic effects might have an even larger impact on the memory capacity of biologically realistic neural network models is a question for further research.

References

- D. O. Hebb, The Organization of Behavior. Wiley, New York, 1949.

- H. Markram, J. Lübke, M. Frotscher and B. Sakmann, Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science, 275, 213-215, 1997.

- J. J. Hopfield, Neural networks and physical systems with emergent collective computational abilities. PNAS, 79, 2554-2558, 1982.

- M. C. W. van Rossum, M. Shippi and A. B. Barrett, Soft-bound synaptic plasticity increases storage capacity. PLoS Computational Biology, 8 (12), e1002836, 2012.