Brain mechanisms beneath prediction during speech perception

Author: Nicola Molinaro is an Ikerbasque Research Fellow at BCBL – Basque Center on Cognition, Brain and Language

Research on language comprehension has always underscored the speed and precision with which the brain integrates and processes the input while at the same time performing multiple parallel tasks. This extreme efficiency is explained by the ability of our mind to exploit contextual information, thus reducing the burden on a perceptual system that constantly faces the entropy of the external environment. More specifically, it has been shown that our perceptual systems continuously estimate incoming sensory signals before those signals actually reach our senses. This “predictive” attitude of the neural system has been proposed to be the basic processing algorithm through which the human brain approaches reality.

Monsalve, Bourguignon & Molinaro 1 evaluated the reliability of the predictive process during speech perception. While the predictive hypothesis is increasingly being adopted in the study of many cognitive skills, language has always been considered an exception for two main reasons. First, language is very “productive”: by combining a relatively small number of words, humans can represent almost any intended meaning, and the same meaning can be expressed in multiple ways. This would make language highly variable and difficult to predict. Second, given the highly symbolic and abstract nature of words, it would be very hard to directly map these mental representations onto the perceptually detailed input coming from external reality.

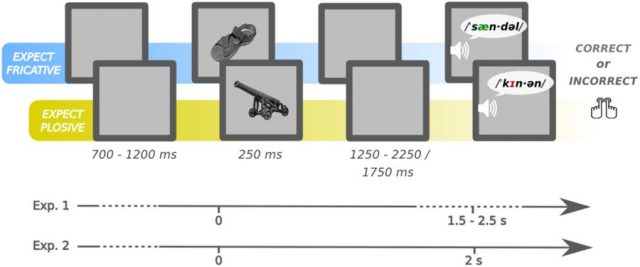

However, Monsalve and colleagues challenged these dogmas in a magnetoencephalographic (MEG) study. They tested whether auditory brain regions show differential activation for the prediction of different speech stimuli in a time interval preceding the onset of the acoustic linguistic stimulus. Participants saw pictures followed by the corresponding auditory words, which they had to attend to. Starting around one second before the delivery of the auditory stimulus, the bilateral auditory regions showed a differential pattern of oscillatory activity around 6-7 Hz that was related to the acoustic pattern of the incoming words (more specifically, of their initial syllable). This effect was observed in two experiments in which different time parameters were manipulated. In the first experiment, the predicted word could appear at a variable time, while in the second experiment the predicted word always appeared after a fixed delay. The authors observed an increased attentional demand in the first but not in the second experiment, as evidenced by the involvement of attention-related left-hemisphere parietal regions. Importantly, however, the oscillatory effect in the auditory regions was similar in both experiments.

The present results highlight the capacity of our brain to build up detailed sensory representations based on internally generated linguistic knowledge. This finding draws attention to the extreme adaptability of our brain, which is able to convert an abstract symbolic representation into a detailed sensory percept that, in turn, will constrain the perceptual integration of the information coming from the external input.

This dynamic underlies our ability to speed up the speech comprehension process. Within a few hundred milliseconds, the brain is able to capture the intended meaning of an utterance. This fast process is heavily supported by our ability to anticipate the incoming information at multiple levels. While language researchers have mainly proposed that such anticipation operates on abstract symbolic representations, these new findings indicate that the brain can already “pre-perceive” an auditory stimulus. We are now evaluating whether this perceptual ability is also evident during reading, i.e., whether the visual system is able to anticipate the visual properties of a printed word when expecting it.

References

- Monsalve, I.F., Bourguignon, M., & Molinaro, N. (2018). Theta oscillations mediate pre-activation of highly expected word initial phonemes. Scientific Reports, 8:9503. doi: 10.1038/s41598-018-27898-w
