Your mind will never be uploaded to a computer

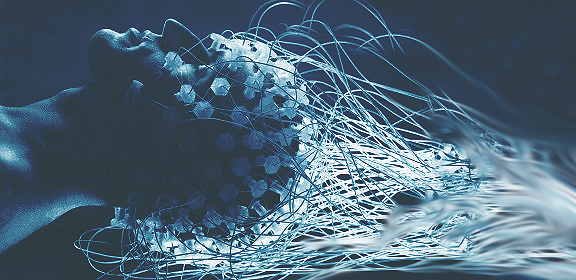

One of the most popular ideas within the new and fashionable transhumanist craze is that, in the future, humans will be able to reach a kind of ‘immortality’ by decoding the information contained in their brains, ‘uploading’ it to some kind of memory device, and making it live on in a computer (see, e.g., Seung 2012). Perhaps, they say, by the time you die it will already be possible to freeze your brain and keep it in a state of suspension until the technology that allows us to ‘read’ or ‘scan’ all your brain’s synapses is ready. Then the information contained in your neurons will be transferred to a computer, and you will live again, and forever. I’m sure you have heard of projects like this at some point, and I hope you have simply taken them as a foolish fantasy; but, in case you considered them a little more seriously, I am going to offer you some arguments intended to show that such a project is just a fashionable myth, amusing as science fiction, but nothing more.

The myth of mind-uploading is based on the following three premises:

1) Your mind consists of the information contained in the patterns of neuronal connections in your brain.

2) It will be possible to copy and store that information as faithfully as necessary.

3) A computer copy of that information will allow us to ‘reproduce’ your mind.

I will not argue here against the first premise. To me it is an obvious truth that a perfect physical copy of your organism would have the same feelings, thoughts, memories, etc., that you have; so, in a sense, it is a platitude that your organism contains all the information necessary to reproduce your mind. But it does not follow from this that ‘the mind is information’, because the same platitude holds for other physiological processes (e.g., digestion), and we would not say that ‘digestion is information’, nor that a ‘perfect’ electronic copy of your organism would be digesting. Perhaps mental activity differs from digestive activity in some relevant way, or perhaps it does not. I leave that question for another time. Here I will concentrate on the last two premises.

Regarding premise 2, the main problem is perhaps not the storage and processing capacity of computers (though we would need something like a quantum computer for that, and it is not clear that such computers will really be technically feasible; see Aaronson 2013), but the technology needed to scan, individually, all the neurons and synapses of a living brain down to the molecular detail required to faithfully represent your ‘real’ neural network. On average, there are approximately one billion synapses in every cubic millimetre of your cortex, many of them coming from neurons that lie far away in the brain. Science-fiction writers can invent bizarre names for technologies capable of performing that task without significantly altering an enormous part of the information we would like to capture… but realistic ways of reaching that goal are nowhere in sight.

The main problem, however, has to do with the third and last premise. Suppose, for the sake of argument, that we have managed to solve the immense difficulties discussed in the previous paragraph, and that we have recorded on some computer device the data about all the synapses of our brain (perhaps also those of the rest of our nervous system?). The question is: what could we do with all those data? What we are completely ignorant of is the ‘code’ (or the thousands of codes) that, within a living brain, transforms all that information into memories, thoughts, feelings, decisions, etc. It is as if we had a CD containing a musical recording, but no software at all to convert it into sounds. Or, perhaps more aptly, as if we had invented a machine capable of recording the conversations that took place while people were painting the walls of the Altamira cave, but we were totally ignorant of the language those people spoke. Even in a case like this, we could use our knowledge of existing languages to infer by analogy some of the properties of the Palaeolithic tongue; but in the case of the ‘brain code’, we have nothing relevant with which to compare our data.

This is a very serious problem, because if our mind ‘consists’ of anything, it consists of something more than simply having some information ‘stored’: it consists of remembering, listening, desiring, deciding, etc., i.e., of carrying out some mental activity. The code problem, then, is that we know nothing about the ‘program’ with which the brain transforms information into a process, nor do we have the slightest clue about the possible nature of such a ‘program’… nor even whether the supposition that it is something like ‘a program’ is a useful one.

Furthermore, even assuming that we had solved the two gargantuan problems I have just described (the scan problem and the code problem), there is a still larger obstacle: the mental activity of a living brain depends on a continuous interaction between the brain, the whole organism, and its environment (which includes other human organisms as complex as our own). Suppose we have stored ‘your mind’ in a computer and made it ‘run’ with some appropriate software: what will you be feeling at a given moment? (To ask a silly question: will you be cold or hot?) What will you be deciding? What conversations will you be having? Lacking any contact with a physical reality from which to get new information and on which to act, the most such a ‘virtual’ mind could perhaps aspire to would be something like our nightly dreams, ‘like’ them also in being absurd and unrealistic. Many scientists even argue that the mental states and capacities of all animals depend not only on what is happening in their brains but also on peculiarities of the body and of the environment with which those brains have evolved over millions of years: the mind is ‘embodied’ and ‘extended’ (see, e.g., Rowlands 2010). The Spanish philosopher Ortega y Gasset said something similar a century ago: “I am I and my circumstance”.

Perhaps this last difficulty could be overcome by providing (Matrix-like) a kind of ‘artificial circumstance’ to the mind uploaded to a computer. But, in order to make the experience of that ‘person’ realistic, this would require simulating not only her own mind but the whole universe, or at least something as complex as a universe in which organisms could function, down to the last relevant detail, as they do in the real one. It is totally unclear to me, however, what kind of ‘eternal life’ that would be: a succession of unconnected episodes, one each time the program starts to run? A single experience repeated again and again? Or a totally open and infinite future (though I just don’t see how this could be programmed on a computer)? In any case, would that life be your life? Would you want such a life?

One last possible solution would be that, instead of providing the mind with a ‘simulated’ organism and environment, the information scanned from your brain might be ‘recorded’ onto a living, ‘virgin’ brain (together with its organism), repeating the process whenever this new body is about to die. But if scanning a brain in all the necessary detail seems hugely remote, fabricating a new brain that replicates another one neuron by neuron and synapse by synapse is, I honestly think, beyond the reach of any possible technology. For neurons and synapses are, after all, the result of biological processes, of an evolutionary and developmental series of events in which many elements depend essentially on chaotic and unpredictable paths.

In a nutshell, live your life as if there were no other, because there is, and there will be, no other, neither for you nor for your circumstance.

REFERENCES

Aaronson, S., 2013, Quantum Computing since Democritus. Cambridge, Cambridge University Press.

Rowlands, M., 2010, The New Science of the Mind: From Extended Mind to Embodied Phenomenology. Cambridge (MA, USA), MIT Press.

Seung, S., 2012, Connectome: How the Brain’s Wiring Makes Us Who We Are. Boston, Houghton Mifflin Harcourt.

COMMENTS

And beyond that, on a more philosophical plane, what makes you think that a copy of your brain is actually you? The brain itself, like the circumstances, matters; it is part of the process and of the problem. An example, in short: you die, and the copy can claim to feel like you, process like you, be you. But if you make such a backup when you are about to die and you end up surviving, how could that backup claim to be you while you are still alive? And what is the practical difference between that situation and one in which you actually die?

I agree with Jesus. This kind of idea flies because the people who propose it know a lot about computers and little about biology. Most of our thoughts depend on our mood. At the same time, our mood depends on the levels of certain hormones in our brains. How are they planning to mimic this particular biological interaction? That alone is enough to burst the idea.

I feel there will also be an issue due to the no-cloning theorem, which states that an unknown quantum state cannot be copied exactly.
