Universal speed limits in thermodynamics away from equilibrium

Many problems in science and engineering involve understanding how quickly a physical system transitions between distinguishable states and the energetic costs of advancing at a given speed. While theories such as thermodynamics and quantum mechanics put fundamental bounds on the dynamical evolution of physical systems, the form and function of the bounds differ.

Rudolf Clausius’s version of the second law of thermodynamics, for example, is an upper bound on the heat exchanged in traversing equilibrium thermodynamic states: an inequality that limits the efficiency of heat engines without an explicit notion of time or fluctuations. By contrast, the time–energy uncertainty relation in quantum mechanics, presented in 1945 by Leonid Mandelstam and Igor Tamm, is a limit on the speed at which quantum systems can evolve between two distinguishable quantum states.

Given this important and long-standing contrast between these two pillars of physics, it is natural to look for thermodynamic bounds analogous to those in quantum mechanics: bounds that are independent of the system dynamics and set limits on the speed of energy and entropy exchange.

Thermodynamic uncertainty relations have been found where fluctuations in dynamical currents are bounded by the entropy production rate. These relations apply to small systems and are part of stochastic thermodynamics, a framework in which heat, work and entropy can be treated at the level of individual, fluctuating trajectories. Recent work has begun to suggest connections to the physics of information, information theory and information geometry.

In parallel to these discoveries, there have been advances in quantum-mechanical uncertainty relations or speed limits that constrain the speed at which dynamical variables evolve. These quantum speed limits, which also have information-theoretic forms, have recently been generalized to open quantum systems embedded in an environment, paving the way for their application in the classical domain.

But, while there has been rapid progress on thermodynamic uncertainty relations, it remains to be seen whether there are speed limits in thermodynamics whose generality rivals those in quantum mechanics.

What governs the speed at which heat, work and entropy are exchanged between a system and its surroundings? Is there a universal quantity that bounds the speed at which thermodynamic observables evolve away from equilibrium? Now, motivated by these questions, a team of researchers has derived [1] a family of limits on the speed with which a system can pass between distinguishable non-equilibrium states and on the heat, work and entropy exchanged in the process.

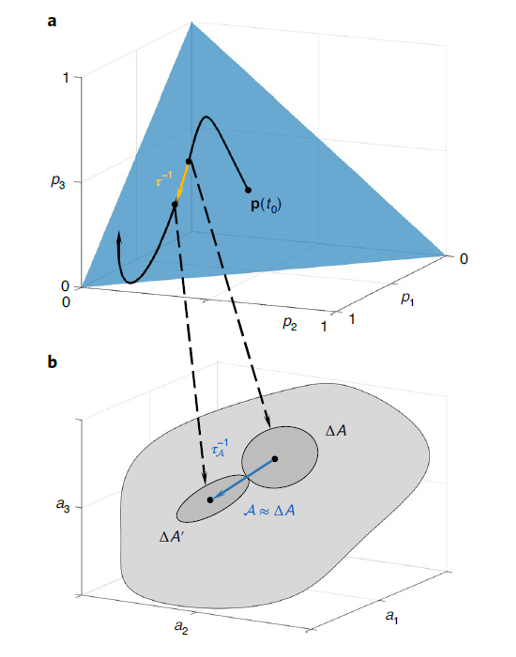

According to thermodynamics, every natural process faces the physical principle that structure formation or useful work production, at a particular speed, comes at a cost: entropy production, energy dissipated as heat, and wasted free energy. To study the restrictions on these costs, the researchers consider a generic classical physical system operating irreversibly, out of thermodynamic equilibrium. The stimulus for the time evolution of this system can be the removal of a constraint or the manipulation of an experimental control parameter, such as temperature or volume. As is common in stochastic thermodynamics, they adopt a mesoscopic description and take the system to have a finite number of configurations, each with an initial probability. As currents of energy and matter cause the system to evolve, the probability distribution will generally differ from that of a Gibbsian ensemble. The working assumption is that the dynamical evolution smoothly transforms the probability of each state at a rate that is itself time-dependent.
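The mesoscopic picture described above can be sketched numerically. The following is a minimal illustration, not the researchers' model: it assumes a three-state system whose probabilities obey a master equation dp/dt = W(t) p, and the rate values, the sinusoidal driving and the names `rate_matrix` and `evolve` are all hypothetical choices made for this example.

```python
import numpy as np

def rate_matrix(t):
    """Hypothetical time-dependent transition rates for a 3-state system.

    W[i, j] is the rate of jumping from state j to state i.
    """
    k = 1.0 + 0.5 * np.sin(t)  # illustrative driving protocol (assumption)
    W = np.array([[0.0, 1.0, 0.5],
                  [k,   0.0, 1.0],
                  [0.5, k,   0.0]])
    # Set the diagonal so every column sums to zero: probability is conserved.
    W -= np.diag(W.sum(axis=0))
    return W

def evolve(p0, t_end, dt=1e-3):
    """Integrate dp/dt = W(t) p with explicit Euler steps."""
    p = np.array(p0, dtype=float)
    n_steps = int(round(t_end / dt))
    for step in range(n_steps):
        p = p + dt * (rate_matrix(step * dt) @ p)
    return p

# Start with all probability in the first configuration and drive the system.
p_final = evolve([1.0, 0.0, 0.0], t_end=2.0)
print(p_final, p_final.sum())
```

Because the rates change in time, the distribution keeps evolving smoothly and need not settle into a Gibbsian form, which is the regime the article refers to.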

The researchers find that these thermodynamic costs are restricted by fluctuations and satisfy a time–information uncertainty relation. The uncertainty relations derived for the flux of heat, entropy and work (both dissipated and resulting from material transport or chemical reactions) demonstrate that the timescales of their dynamical fluctuations away from equilibrium are all bounded by the fluctuations in information rates. In other words, rates of energy and entropy exchange are subject to a speed limit (a time–information uncertainty relation) imposed by the rates of change in the information content of the system. Therefore, while away from equilibrium, natural processes must trade speed for thermodynamic costs.
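A small numerical check can make the idea of such a speed limit concrete. The sketch below rests on a simplifying assumption that is not taken from the article: the observable has fixed values a_x on each configuration. In that case the Cauchy–Schwarz inequality gives |d⟨A⟩/dt| ≤ ΔA · Δİ, where Δİ² is the variance of the information rate −d ln p_x/dt (this variance coincides with the Fisher information with respect to time). The function name `speed_limit_terms` and the random test data are hypothetical.

```python
import numpy as np

def speed_limit_terms(p, p_dot, a):
    """Return (|d<A>/dt|, std(A) * std(information rate)) for fixed values a_x."""
    rate = abs(np.sum(p_dot * a))                 # |d<A>/dt| when a_x is constant
    mean_a = np.sum(p * a)
    std_a = np.sqrt(np.sum(p * (a - mean_a) ** 2))
    # Variance of -d ln p_x / dt; its mean is zero because sum(p_dot) = 0.
    info_rate_var = np.sum(p_dot ** 2 / p)
    return rate, std_a * np.sqrt(info_rate_var)

rng = np.random.default_rng(0)
n = 5
p = rng.dirichlet(np.ones(n))   # a random probability distribution
p_dot = rng.normal(size=n)
p_dot -= p_dot.mean()           # rates of change must sum to zero
a = rng.normal(size=n)          # arbitrary observable values (assumption)

rate, bound = speed_limit_terms(p, p_dot, a)
print(rate <= bound)
```

The inequality holds for any distribution and any admissible rates of change, which illustrates why a bound of this kind does not depend on the details of the dynamics. The heat, work and entropy bounds discussed in the article involve observables that may themselves change in time, which this sketch does not cover.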

Interestingly, all these uncertainty relations have a mathematical form that mirrors the Mandelstam–Tamm version of the time–energy uncertainty relation in quantum mechanics.
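For comparison, the two forms can be written side by side. This is a sketch under stated assumptions rather than a transcription of the paper's results: the classical line is the simplest case of an observable with time-independent values, and the symbols τ_A, ΔA, ΔH and Δİ are notation chosen here.

```latex
% Characteristic time for the mean of an observable A to change by one standard deviation
\tau_A = \frac{\Delta A}{\left| \, d\langle A \rangle / dt \, \right|}

% Mandelstam--Tamm (quantum): the energy spread \Delta H sets the speed limit
\tau_A \, \Delta H \ge \frac{\hbar}{2}

% Classical analogue (assumed simplest case): the spread of the information rate
% \dot{I}_x = -\frac{d}{dt} \ln p_x sets the speed limit
\tau_A \, \Delta \dot{I} \ge 1
```

In both cases a timescale for change multiplied by a measure of fluctuations is bounded from below; the energy fluctuations of the quantum case are replaced by fluctuations in the rate of information change.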

Because the formalism requires few details about the model system or the experimental conditions, the researchers expect it to be applicable to a broad range of physical and (bio)chemical systems. Since it makes no assumption about the underlying model dynamics or the external driving protocol, it could also be applied to any non-equilibrium process with a differentiable probability distribution.

Author: César Tomé López is a science writer and the editor of Mapping Ignorance.

Disclaimer: Parts of this article may be copied verbatim or almost verbatim from the referenced research paper.

References

- Schuyler B. Nicholson, Luis Pedro García-Pintos, Adolfo del Campo & Jason R. Green (2020). Time–information uncertainty relations in thermodynamics. Nature Physics. doi: 10.1038/s41567-020-0981-y