To filter or not to filter, that’s the question…in 3D scene reconstruction

To filter or not to filter, that’s the question…in 3D scene reconstruction

The history of science is full of examples of researchers who arrived at the same discovery independently. Unfortunately, this still happens in this information age, thus technological deficiencies are not to blame for this lack of communication between different research communities. Odd as it may sound from outside, I hold that much of this isolation between groups is a side effect of the high level of specialization achieved by modern research, in which a specialized terminology is adopted in which identical ideas may be named very differently in different fields, hindering the mutual exchange of ideas.

Eventually, two of these fields may find how much they had in common and will then learn the best from each other. Such an exciting situation has occurred during the last few years in the high-tech fields of mobile robotics and computer vision, after bringing new life to the well-established topographic and photogrammetric methods from the middle of the 20th century, when the world was still witnessing the very first electronic computers.

All those fields had devoted huge efforts to solving the problem of reconstructing the shape and volume of 3D objects from pictures or video footage, but each from very different perspectives. As a result, the same problem has been named, during the last seven decades, in at least three different ways: Bundle Adjustment(in photogrammetry), Structure from Motion(in computer vision) and Simultaneous Localization and Mapping(in mobile robotics).

The paper at hand today, entitled “Real-time monocular SLAM: Why filter?” and presented in the influential conference ICRA 2010 by Hauke Strasdat et al. 1, perfectly illustrates the dilemma faced by researchers in 3D scene reconstruction. To put things in context, it shall be noted that the work is coauthored by Andrew J. Davison (Imperial College London, UK), renowned for introducing probabilistic filtering techniques into scene reconstruction from camera images in the early 2000s. Among other applications, such an advance led to an immense step forward in augmented reality, which is now feasible even on smartphones. And yet, ten years later we have this new work wondering whether filtering was really the best idea. I’ll try to explain next the reasons for such a surprising turn.

The problem of reconstructing a 3D scene or object can be posed as a problem of estimation, in the probabilistic sense: given a set of evidences (i.e. images), what is the most likely structure of the 3D objects out there? One of the most powerful tools for modeling and understanding estimation problems are graphical models, in which nodes stand for unknown variables or observed data and edges between them symbolize some kind of relationship.

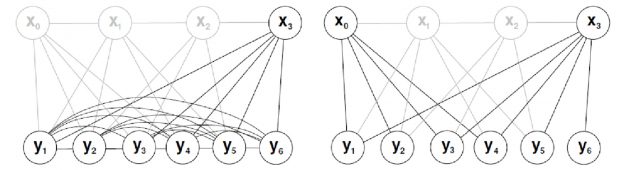

Most approaches to 3D scene reconstruction can be ultimately represented as a graphical model where the unknowns are camera locations (the cameras pointing downwards in figure 1) and the placement of world points (the points labeled as li). By means of images we make observations (denoted as lines), which in practice are pixel coordinates of some world point in the image grabbed from some camera location. Therefore, observations are edges in the problem graph since they link world points together with camera poses.

For reconstructing a scene we normally capture a sequence of images as the camera moves around, thus it is natural to assume that each camera location should be close to the previous one. In our graphs, that means that edges are added between each world point and a camara location. Also, edges exist between camera locations from which we observe the same world points, since they introduce a geometrical constraint in the relative position of the cameras. All this leads to the graphical models shown in the figure 2. Note that there exist two alternative representations: directed graphs (edges have a sense of direction) and undirected graphs (edges do not have an implicit direction). The former are called Bayesian Networks and the latter Markov Random Fields, but for their differences are not relevant for the present discussion.

Two fundamental methods exist to find out the unknowns from these graphs. The first one is Bayesian filtering. The “Bayesian” comes from its probabilistic foundations, which are based on the Bayes rule. The “filtering” is for its principle of working: we process images sequentially, one by one as they are grabbed. After integrating one new image, its information is fused with all previous data to yield a probabilistic estimation of some unknowns. Notice the “some”. In a basic Bayes filter, we obtain an estimation of the position for all the world points but only the location of the last camera pose. The alternative second method is least-squares optimization. It can be demonstrated to be equivalent to Bayesian filtering if all the uncertainties follow Gaussian distributions, but it normally estimates the entire set of unknowns: all world points and all camera poses.

The pioneering works of Andrew Davison in the early 2000s with Bayesian filtering applied to 3D scene reconstruction represented a milestone for both the computer vision and robotics communities, which quickly adopted the filtering approach as the key to results as cools as these ones:

Despite the great results, sequential filtering has some shortcomings regarding its computational efficiency. If we try to build models of large environments using these techniques we will soon notice that the larger the model becomes, the longer it takes to fuse a new image with all the previous data. Building a map of 1000 world points costs 1000 times more CPU time than building a map with only 100 points. In computer science, we say that such an algorithm has a cubic computational complexity, i.e. its costs grows as O(N3) with N the number of points.

In contrast, least-squares optimization approaches, when implemented properly, take advantage of what we call the sparsity of the problem and typically achieve a linear cost O(N) with the number of world points, allowing us to build larger models much faster than filtering. By sparsity we refer to the loose coupling that exists between all the unknowns in the problem, if we try to estimate all of them simultaneously. Physically, this means that each camera only observes a reduced number of world points, and that each world point only appears in a few images. It can be then demonstrated that reconstructing a 3D model following the graphical models shown above becomes solving a linear system of the form:

A x = b

when the x are the unknowns, b is a vector with pixel coordinates and Ais a symmetric matrix with a very sparse structure, similar to that of the figure:

In the work by Hauke Strasdat et al., the authors quantitative investigate under which conditions is really more advantageous to employ filtering or optimization. And the published results were quite conclusive: unless you have very limited computational resources, it is more efficient to employ the CPU time in least-squares optimization methods rather than in filtering. Efficiency here means that we approach the real solution of the 3D model faster for the same amount of CPU time spent in the computations. Filtering still may be useful if we need fast estimations in real-time, at the cost of not being the more accurate results that we could have obtained with optimization.

The moral in this story is that filtering methods became extremely popular during the early 2000s, in spite of that fact that the “more recent” and more efficient least-squares optimization approaches… were already proposed in photogrammetry articles written in the 50s of the last century!

We have had to wait until the recent development of open source programming libraries aimed at solving sparse systems of equations for rediscovering those “good old” optimization methods. Nowadays, even Google Street View heavily relies on those “old” algorithms for reconstructing the paths of their cars and the 3D location of interesting points in the streets of thousands of cities around the World.

References

- Strasdat, H. and Montiel, JMM and Davison, A.J. Real-time monocular SLAM: Why filter?. IEEE International Conference on Robotics and Automation (ICRA), pp. 2657-2664, DOI: 10.1109/ROBOT.2010.5509636, 2010. ↩

5 comments

Fantastic work! Very interesting topic, and very well explained.

Thank you

A simple question (I hope): if I understand correctly, when using filtering techniques, your past measures are considered within your system state, and as can not be measured, they introduce a drift error in the pose calculation. It is something like that you are doing just a local optimization. This drift can be corrected if you revisit previously visited places, and measure features that you had previously measured, correcting in this way the estimation error.

In contrast least-squares or keyframe based methods really perform a global optimization. Or at least, an optimization over all the saved keyframes, that should have to consider the whole workspace.

In such way, is it correct to consider that optimization-based methods do not suffer from drift?

Thanks!

Hi Belion,

Good insight! The “drift” to which you refer may actually happen in all estimation methods (filters and batch). The unique way to correct it is by “closing a loop”, that is, providing the estimator with evidence that reveals the drift so the errors are somehow propagated and corrections applied.

The difference is that filters must encode all the information required to correct the entire state in the cross-covariances of the last estimation, making matrices much denser than least-squares/batch methods.

Hope it helps!

Congratulations for this post. Good concept dissemination without loosing accurate vocabulary. Thanks !

[…] y “redescubrir la rueda”. De hecho en mi campo he sido testigo de algo parecido, como ya conté aquí, pero lo asombroso de la fórmula de Tai es la obviedad del método redescubierto. En mi opinión […]