Disinformation about vaccines on Twitter

Author: Martha Villabona works at the Centro Nacional de Innovación e Investigación Educativa (CNIIE) of the Spanish Ministry of Education and Vocational Training, where she coordinates the area of multiple literacies.

The article “Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate”, published in 2018 [1], states that, despite the great potential of social networks to disseminate objective information, they are often abused to spread content that may harm health. A direct consequence of exposing social network users to negative information may be a drop in vaccination rates. Indeed, people who reject vaccination have a very significant presence on social networks. This can increase the doubts of many families who trust what they read on the Internet more than what their doctor tells them.

According to the authors of the article, public health research has focused more on the fight against anti-vaccine content online than on the agents that produce and promote this content, such as bots and trolls.

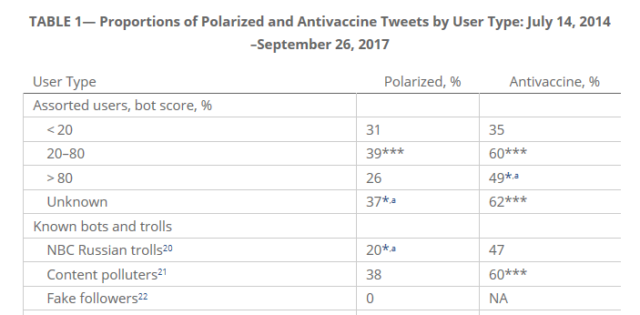

Outbreaks of diseases such as measles or mumps make it necessary to fight the spread of false information on social networks. Much of this health disinformation can be spread by “bots” (accounts that automate the promotion of content) and “trolls” (individuals who misrepresent their identities with the intention of promoting discord). The strategies used to misinform online with both are varied, but the most effective is amplification, which seeks to create a false impression of consensus through the use of bots and trolls. The study analyzes the role they play in the dissemination of vaccination-related content, based on 1,793,690 tweets collected on Twitter from July 14, 2014 to September 26, 2017.

First, the researchers examined whether Twitter bots and trolls publish tweets about vaccines more frequently than average Twitter users, in order to gauge the impact of polarizing and anti-vaccine messages. Then they examined the rates at which each type of account tweeted messages that were in favor of vaccines, against them, or neutral. Finally, they identified the hashtag #VaccinateUS, used only by Russian trolls, and applied qualitative methods to describe its content. This hashtag was created to promote confrontation between those in favor of and those against vaccines, and it framed vaccination as a political issue. The #VaccinateUS tweets were uniquely associated with the accounts of Russian trolls linked to the Internet Research Agency, a company backed by the Russian government that specializes in online influence operations.

One of the findings reported in the study is that 93% of tweets about vaccines are generated by accounts that cannot be verified as either bots or human users, and that these accounts preferentially tweet false information about vaccines.

Another aspect the study highlights is that the messages in which the hashtag appears contain grammatical errors and irregular phrasing. However, #VaccinateUS messages contain fewer spelling errors than other vaccine-related tweets and do not include links to external content, strange comments or images.

Likewise, it is interesting to analyze the thematic content of the messages with that hashtag. The authors of these tweets are aware of the arguments for and against vaccination, but there are differences in how the messages are formulated. As stated in the study, the authors of #VaccinateUS messages tend to link them explicitly to US politics and often use emotional appeals to “freedom”, “democracy” and “constitutional rights”, as well as to “parental choice” and specific vaccine-related legislation.

It is also striking that anti-vaccine messages with #VaccinateUS often refer to conspiracy theories. In general, such theories tend to target a variety of culprits, such as specific government agencies or secret organizations. #VaccinateUS messages, however, are directed almost exclusively at the government of the United States. In addition, these messages included arguments related to race or religion, issues that provoke confrontation in the United States.

Russian trolls and Twitter bots publish more content about vaccination than the average user. Their strategy is to promote discord repeatedly on controversial issues. In this way they normalize the debate, leading citizens to question the scientific consensus on the efficacy of vaccines.

We must also take into account the accounts that spread malware, unsolicited commercial content and other disruptive materials that generally violate Twitter's terms of use. They post messages against vaccines 75% more frequently than the average Twitter user. This suggests that opponents of vaccines can spread messages using bot networks designed primarily for marketing. Conversely, spambots, which can be easily recognized as non-human, are less likely to promote an anti-vaccine campaign, since their goal is to increase the number of clicks, and therefore revenue, by disseminating relevant content.

The study concludes that most anti-vaccine content is generated by bot and troll accounts, and it suggests that more research is needed to help health professionals combat the messages of bots and, especially, of the trolls that fuel debate and discord.

References

1. David A. Broniatowski et al. (2018) “Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate”, American Journal of Public Health 108, no. 10: pp. 1378-1384. DOI: 10.2105/AJPH.2018.304567
